Over the years, we have seen the emergence of Artificial Intelligence and its growth across different aspects of life. AI is the theory and development of computer systems that are able to perform tasks normally requiring human intelligence, such as decision-making, speech recognition and translation between languages.

Artificial Intelligence is about building smart machines that can think like humans and mimic their actions to solve complex problems. AI is used in the healthcare industry for faster and better diagnosis of diseases, in less time than doctors would need. It is also used in the transport industry, with the emergence of self-driving cars. The creative and entertainment industry uses AI too: companies like Netflix and Disney use it in their movie recommendation systems.

Deep learning is a class of machine learning algorithms that uses multiple layers to progressively extract higher-level features from raw input. It loosely imitates the workings of the human brain in processing data and creating patterns for use in decision making, and it can use both structured and unstructured data. Its algorithms are structured in layers to create an artificial neural network that can learn and make intelligent decisions without supervision. A practical example of deep learning is face recognition, which employs layers of trained neural networks.

WHAT ARE GANs?

GANs (generative adversarial networks) are an approach to generative modelling that uses deep learning methods such as convolutional neural networks. Generative modelling is an unsupervised learning task in ML that involves automatically discovering and learning the patterns in input data, so that the model can be used to generate new examples that could plausibly have been drawn from the original dataset. GANs allow generative models to produce realistic examples across a range of problem domains, most notably image-to-image translation and generating realistic photos of objects and people. They can also generate realistic 3D models, videos and more. Yann LeCun, one of the fathers of deep learning, said that “GAN is the coolest idea of ML in the last 20 years”.

HOW DO GANs WORK?

A GAN can be trained to generate images from random noise. For example, we can train a GAN to generate images that look like the hand-written digits in the MNIST database. A GAN generally has two parts: the generator and the discriminator. The generator creates images, while the discriminator classifies images as real or fake. The two are connected during generator training: the generator’s output is fed to the discriminator, and the discriminator’s verdict is the signal used to update the generator.

Most neural networks take in data that we want to classify or make a prediction about. A GAN, however, always outputs entirely new data instances, so it takes random noise as its input, and the generator transforms this noise into a meaningful output. By varying the noise, a GAN can produce a wide variety of data, sampling from different places in the target distribution. Experiments suggest that the distribution of the noise doesn’t matter much, so we can choose something that is easy to sample from, such as a uniform distribution. For convenience, the space from which the noise is sampled is usually of a smaller dimension than the output space.
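To make the idea of noise as input more concrete, here is a minimal sketch in plain Python. The dimensions, the function names and the single random linear layer standing in for the generator are all assumptions made for illustration. A real generator is a deep network with learned weights.

```python
import random

LATENT_DIM = 4    # assumed size of the noise vector (kept tiny for illustration)
OUTPUT_DIM = 16   # assumed size of a generated sample, e.g. a flattened 4x4 image


def sample_noise(dim, rng):
    """Draw a noise vector from a uniform distribution on [-1, 1]."""
    return [rng.uniform(-1.0, 1.0) for _ in range(dim)]


def make_toy_generator(latent_dim, output_dim, rng):
    """Build a stand-in generator: one fixed random linear layer.

    This only shows how a low-dimensional noise vector is mapped to a
    higher-dimensional output. Nothing here is trained.
    """
    weights = [[rng.gauss(0.0, 1.0) for _ in range(latent_dim)]
               for _ in range(output_dim)]

    def generate(z):
        return [sum(w * x for w, x in zip(row, z)) for row in weights]

    return generate


rng = random.Random(0)  # fixed seed so the run is repeatable
generator = make_toy_generator(LATENT_DIM, OUTPUT_DIM, rng)

z1 = sample_noise(LATENT_DIM, rng)
z2 = sample_noise(LATENT_DIM, rng)
sample1 = generator(z1)
sample2 = generator(z2)

print(len(z1), len(sample1))   # noise dimension vs. output dimension
print(sample1 != sample2)      # different noise gives a different output
```

Each new noise vector lands on a different output, which is how a single trained generator can produce many different images.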

In training a neural network, we alter the network’s weights to reduce the loss of its output. In a GAN, the generator feeds into the discriminator, and the discriminator produces the output we’re trying to affect. The generator loss penalizes the generator for producing a sample that the discriminator network classifies as fake.
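As a rough illustration of that penalty, the sketch below computes one common form of the generator loss, the mean of -log(D(G(z))), from the discriminator's raw scores on generated samples. The function names and example scores are made up, and other GAN variants use different loss functions.

```python
import math


def sigmoid(x):
    """Turn a raw discriminator score into a probability that the sample is real."""
    return 1.0 / (1.0 + math.exp(-x))


def generator_loss(disc_scores_on_fakes):
    """Mean of -log(D(G(z))) over a batch of generated samples.

    The loss is small when the discriminator believes the fakes are real,
    and large when it confidently flags them as fake.
    """
    eps = 1e-12  # avoids log(0)
    probs = [sigmoid(s) for s in disc_scores_on_fakes]
    return -sum(math.log(p + eps) for p in probs) / len(probs)


# Made-up discriminator scores for a batch of three generated images.
fooled = generator_loss([3.0, 2.5, 4.0])      # discriminator thinks they are real
caught = generator_loss([-3.0, -2.5, -4.0])   # discriminator thinks they are fake

print(fooled < caught)
```

During training, this loss would be backpropagated through the discriminator into the generator, and only the generator's weights would be updated.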

VISUALIZING A SONG

As a visualizer plays a song file, it reads the audio data in very short time slices (usually less than 20 milliseconds). The visualizer does a Fourier transform on each slice, extracting the frequency components, and updates the visual display using the frequency information. An example is the Audiomack app visualizer. How the visual display is updated in response to the frequency info is up to the programmer. Generally, the graphics methods have to be extremely fast and lightweight in order to update the visuals in time with the music (and not bog down the PC). In the early days (and still), visualizers often modified the colour palette in Windows directly to achieve some pretty cool effects. One characteristic of frequency-component-based visualizers is that they don’t often seem to respond to the “beats” of music (like percussion hits, for example) very well. More interesting and responsive visualizers can be written that combine the frequency-domain information with an awareness of “spikes” in the audio that often correspond to percussion hits.
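The slice-and-transform loop described above can be sketched in plain Python. The sample rate, slice size and function names are assumptions for illustration. A real visualizer would use a fast Fourier transform (FFT) library instead of the slow, naive transform written out here.

```python
import cmath
import math

SAMPLE_RATE = 8000   # assumed samples per second
SLICE_SIZE = 128     # 128 / 8000 = 16 ms per slice, under the 20 ms mentioned above


def dft_magnitudes(samples):
    """Naive discrete Fourier transform: magnitudes of bins 0..N/2."""
    n = len(samples)
    mags = []
    for k in range(n // 2 + 1):
        total = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n)
                    for t in range(n))
        mags.append(abs(total))
    return mags


def dominant_frequencies(signal, slice_size, sample_rate):
    """Split the signal into slices and return the strongest frequency (Hz) of each."""
    result = []
    for start in range(0, len(signal) - slice_size + 1, slice_size):
        mags = dft_magnitudes(signal[start:start + slice_size])
        # Skip bin 0 (the constant offset) and pick the loudest remaining bin.
        peak_bin = max(range(1, len(mags)), key=lambda k: mags[k])
        result.append(peak_bin * sample_rate / slice_size)
    return result


# Test signal: a pure 1000 Hz tone lasting four slices.
tone = [math.sin(2 * math.pi * 1000 * t / SAMPLE_RATE)
        for t in range(4 * SLICE_SIZE)]
peaks = dominant_frequencies(tone, SLICE_SIZE, SAMPLE_RATE)
print(peaks)  # [1000.0, 1000.0, 1000.0, 1000.0]
```

A visualizer would use the full list of magnitudes per slice, not just the peak, to drive bars, colours or shapes on screen.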

THE DEEP MUSIC VISUALIZER AND BigGAN

BigGAN is a recent member of the GAN family, created in 2018 at Google by Brock et al. It is considered big because it contains over 300 million parameters, trained on hundreds of Google TPUs at an estimated cost of $60,000. The result is an AI model that generates images from 1128 input parameters: a 1000-unit class vector, with one weight per ImageNet class, and a 128-unit noise vector. Interpolating between classes without changing the noise vector reveals shared features in the latent space, like faces, while interpolating between random noise vectors reveals deeper structures. Apps like Artbreeder provide simple interfaces for creating AI artworks.

The deep music visualizer, built by Matt Siegelman, is an open-source tool for navigating the latent space with sound. To use it, first clone the GitHub repo and follow the installation guide at %[github.com/msieg/deep-music-visualizer]. This requires some Python programming skills. Then run this command from the repo directory in your terminal:

python visualize.py --song theNameOfUrSong.mp3

Note: Replace theNameOfUrSong.mp3 with the name of the song file you want to visualize, including the .mp3 extension.

The deep music visualizer syncs pitch with the class vector, and volume and tempo with the noise vector, so that pitch controls the objects, shapes and textures in each frame, while volume and tempo control movement between frames. At each time point in the song, a chromagram of the twelve chromatic notes determines the weights of up to 12 ImageNet classes in the class vector. BigGAN is big and slow: rendering a song on a normal laptop takes about 8 hours. At a resolution of 128x128, however, it should take about 25 minutes. To run at 128x128, use the command:

python visualize.py --song theNameOfUrSong.mp3 --resolution 128
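To give a feel for how a chromagram can drive the class vector, here is a minimal sketch in plain Python. It is not the visualizer's actual code. The function name, the normalization step, the class indices and the chroma values are all hypothetical, chosen only to show the mapping from 12 notes to 12 class weights.

```python
NUM_CLASSES = 1000  # one weight per ImageNet class in BigGAN's class vector


def chroma_to_class_vector(chroma, class_indices, num_classes=NUM_CLASSES):
    """Map 12 chroma strengths (C, C#, ..., B) onto the weights of 12 chosen classes."""
    if len(chroma) != 12 or len(class_indices) != 12:
        raise ValueError("expected 12 chroma values and 12 class indices")
    vector = [0.0] * num_classes
    peak = max(chroma)
    if peak <= 0:
        return vector  # silence: no class is active
    for strength, idx in zip(chroma, class_indices):
        # Normalize so the loudest note gets weight 1.0.
        vector[idx] = strength / peak
    return vector


# Hypothetical choice of 12 ImageNet class indices, one per chromatic note.
classes = [1, 9, 22, 130, 291, 340, 409, 417, 530, 850, 947, 980]

# Made-up chroma values for one time point: D is loudest, then E, then G.
chroma = [0.0, 0.0, 0.8, 0.0, 0.4, 0.0, 0.0, 0.2, 0.0, 0.0, 0.0, 0.0]

vec = chroma_to_class_vector(chroma, classes)
active = {i: round(w, 2) for i, w in enumerate(vec) if w > 0}
print(active)  # {22: 1.0, 291: 0.5, 417: 0.25}
```

As the notes in the song change, the active weights shift from one set of classes to another, and the generated image changes with them.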

However, you can generate high-resolution videos much faster by launching a virtual GPU on Google Cloud, which cuts the runtime from 8 hours to a few minutes. The virtual GPU isn’t free: it costs $1/hour, but Google gives new users $300 in credit. As for video duration, it can be useful to generate shorter videos to limit runtime while testing other input parameters. Pitch sensitivity is the sensitivity of the class vector to changes in pitch. At higher pitch sensitivity, the shapes, textures and objects in the video change more rapidly and adhere more precisely to the notes in the music. Tempo sensitivity is the sensitivity of the noise vector to changes in volume and tempo. Higher tempo sensitivity yields more movement.

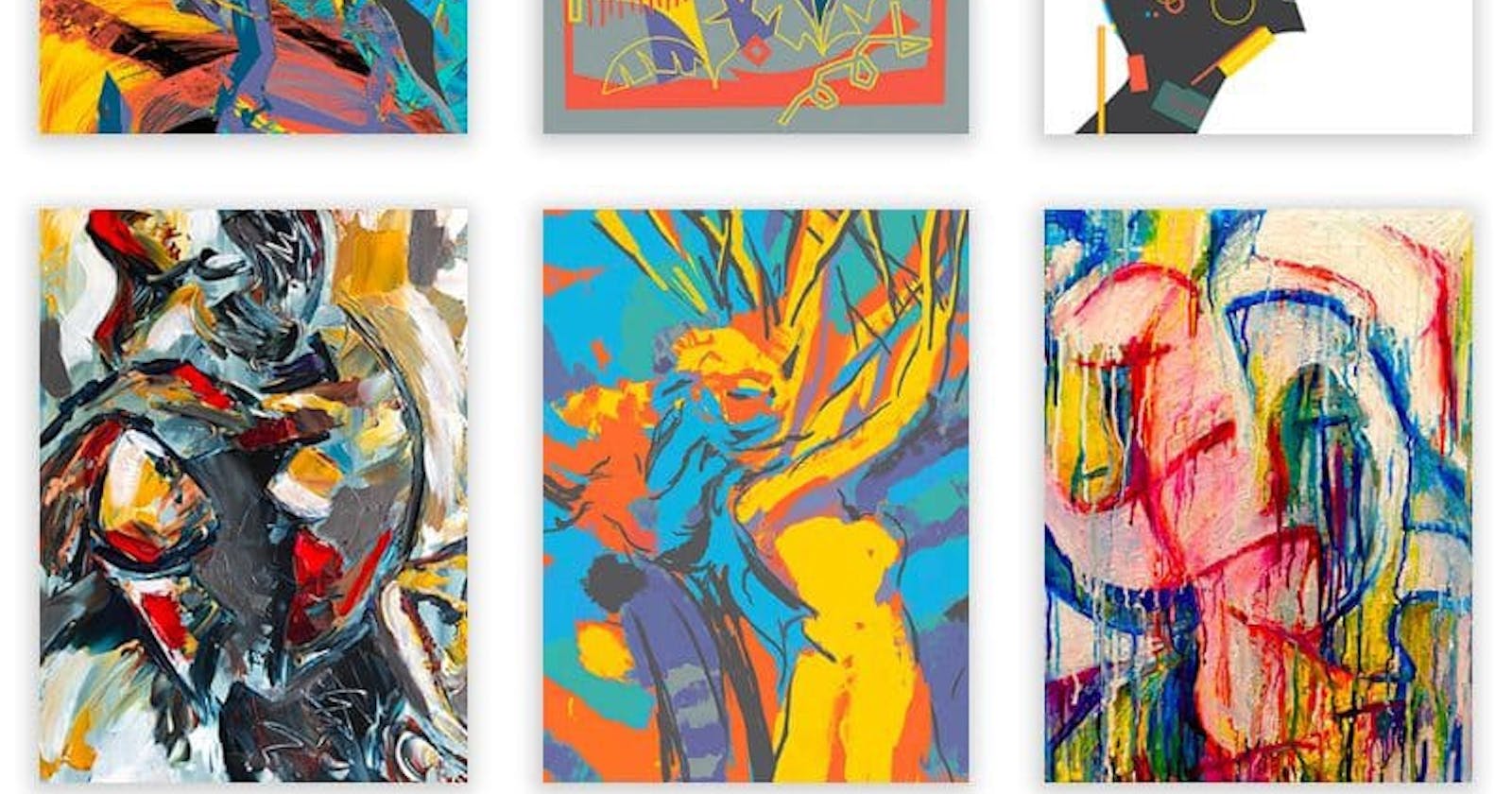

Examples of visualized songs
